Much ado about intuition

Part 1: statistical learning, knowledge, concepts

Introduction: personality psychology and situationism

I suspect that the FFM [five factor model] here manifests a tension begotten on the sharp horns of a dilemma: It is difficult for any theory of personality (and, I have an ill-formed hunch, most any theory of anything) to simultaneously respect the demands of both unification and empirical adequacy. 1

I encountered this quote last month and, since then, in a fit of frequency bias fury, I’ve been thinking about just how widely applicable it is. Nontrivially, and most obviously, it's related to topics in the philosophy of science, like scientific explanation and theory choice. It weighs heavily on the epistemic validity of deep learning and how useful it may be in scientific discovery during the promised age of ‘big data’. But also, it threatens to besmirch intuitive accounts of concepts, intuitive (sometimes called folk) accounts of emotion, and, ipso facto, intuition itself. Additionally, I think that with all of its psychological import, you can find a correlative dilemma in the development of modern art, and, especially when considering ideas of ‘conceptual amelioration’, it may help explain some current political division, though these latter cases may prove to be reaching. I will spend most of this first article simply introducing the most vital ideas that will serve as a necessary backdrop for later discussion.

First - what does Doris mean by ‘unification’ and ‘empirical adequacy’? Explaining this requires an explanation of the source text. Ponder this: if you had to recommend a car mechanic to me, what would you tell me about your chosen mechanic to convince me to go to him? You would likely tell me he’s honest and fair. He’s a nice guy -- very friendly -- he won’t rip me off. And if you had to describe your significant other, would you use words like ‘compassionate’, ‘understanding’, or ‘supportive’? How about mythical figures like Odysseus? - ‘virtuous’, maybe. We intuitively, at least in the West, gravitate towards descriptions of character. Further, especially when we use lofty words like ‘virtuous’ and ‘respectable’, we seem to be ascribing traits. Moreover, we expect people with these traits to exhibit behaviors in line with them: we expect this to reliably be the case (one does not behave virtuously one week and then never again, and one does not behave virtuously at a restaurant but not at home) and we, presumably, expect that traits are evaluatively integrated (that is, we would find it extremely unlikely that one is both ‘virtuous’ and ‘dishonest’ or ‘greedy’). At least, this is the kind of view Doris argues against, and it is the kind of view Doris says certain people working in personality psychology and virtue ethics subscribe to. He calls this notion of character globalism, and here are the exact theses he claims they uphold:

Consistency. Character and personality traits are reliably manifested in trait-relevant behavior across a diversity of trait-relevant eliciting conditions that may vary widely in their conduciveness to the manifestation of the trait in question.

Stability. Character and personality traits are reliably manifested in trait-relevant behaviors over iterated trials of similar trait-relevant eliciting conditions.

Evaluative integration. In a given character or personality the occurrence of a trait with a particular evaluative valence is probabilistically related to the occurrence of other traits with similar evaluative valences. 2

Not to mince words - Doris claims this view, in which the five factor model is implicated, is empirically inadequate. He is backed by empirical evidence on behavior from situationist psychology. Purportedly, in hundreds of studies, the most important variables in predicting behavior have proven to be situational or contextual variables -- mood effects and group effects, not character traits. So, if poor Alice drops her papers next to the phone booth, whether you’ve found a dime in the coin return slot determines if you are likely to help (making it nearly certain, in one study). Etcetera - Milgram’s experiment (which has been replicated in several countries) and the Stanford prison experiment are other famous studies where analysis shows situational variables overcoming self-reported, trait-implicated ones, much to the participants' chagrin. Thus, the situationist view has empirical validity.

But where’s the story here? Let’s say I buy it -- context is where predictive power lies -- is this useful for predicting behavior in the future? Presumably, to do so I’d need a gargantuan amount of data from experiments testing all kinds of imaginable situations that I could draw from. Remember - the point is not that failing to help poor Alice means everyone is actually self-concerned and cold. Rather, it’s that the situational variable was the correct predictor of behavior, not whether the phone caller was considered conscientious or not. Moreover, it’s implied that the ascription of globalist traits is something of a metalepsis - unjustified. What a globalist, personality psychology theory like the FFM does have is axiomatic paucity. With only a few measured variables I am presumably able to say something about your performance, behavior, chances of job success, etc. That’s a very appealing and unified explanatory structure. As for actual numbers, mean correlations between FFM and job proficiency, for example, lie around or below .3 (explaining less than 10% of the variance, since the variance explained is the square of the correlation). What saves the day here (according to the personality psychologists, who will probably, for their own reasons discussed below, downplay the importance of single-item behavioral data) are statistical aggregation methods like the Spearman-Brown prophecy formula. Applied to measured item-to-item mean correlations, like .23 for honesty and .14 for extraversion, the formula predicts much stronger correlations of .86 and .81 respectively once many items are aggregated.3 Thus, the situationist view is empirically adequate as far as predicting single-trial behavior goes, but presumably needs huge amounts of data to predict behavior in general, and the personality psychologist’s view displays uniformity and explanatory structure but only correlates well in the aggregate.
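To make the aggregation step concrete, here is a minimal sketch of the Spearman-Brown prophecy formula in its standard form, r_k = k·r / (1 + (k − 1)·r), where r is the mean item-to-item correlation and k is the number of items aggregated. The source does not say how many items were aggregated to reach .86 and .81, so the function `items_needed` (a hypothetical helper name, like the others here) simply inverts the formula to see what k those figures would imply.

```python
def variance_explained(r: float) -> float:
    """Share of variance accounted for by a correlation r (its square)."""
    return r ** 2


def spearman_brown(mean_r: float, k: float) -> float:
    """Predicted reliability of an aggregate of k items whose mean
    item-to-item correlation is mean_r (standard Spearman-Brown form)."""
    return k * mean_r / (1 + (k - 1) * mean_r)


def items_needed(mean_r: float, target: float) -> float:
    """Invert Spearman-Brown: the number of items k implied by a target
    aggregate correlation. This is an illustrative back-calculation,
    not a figure reported in the source."""
    return target * (1 - mean_r) / (mean_r * (1 - target))


if __name__ == "__main__":
    print(f"r = .30 explains {variance_explained(0.30):.0%} of the variance")
    for trait, r, target in [("honesty", 0.23, 0.86), ("extraversion", 0.14, 0.81)]:
        k = items_needed(r, target)
        print(f"{trait}: r = {r}, target = {target}, implied k = {k:.1f}")
```

Under these assumptions the quoted figures correspond to aggregating on the order of two dozen items per trait, which is the personality psychologist's point: a weak single-item correlation is compatible with a strong aggregate one, and it says little about any single trial.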

Clearly, I will not solve this. There are intuitive backings to each view. For the situationist - my day-to-day concern is presumably whether you’ll be ‘honest’ right now. I don’t care much if you’re honest on the aggregate. In fact, with newfound situational clarity, I may be wise to bribe your honesty with a fresh bakery scent or by offering you a gift. Similarly, when one hires a babysitter, they are banking on the fact that the babysitter will not abuse or neglect their children. They are not banking on the fact that the babysitter is, broadly speaking, virtuous. For the personality psychologist - the low mean correlation of 0.3 in single-trial behavioral measures says nothing about personality. It’s analogous to thinking you can tell something about a person’s intelligence from a single question on a test. Similarly, you wouldn’t say you ‘know’ someone after a single encounter with them. Plausibly, also, situationism is a bit self-undermining because if its main thesis is strictly true then any behavioral results are only relevant to the contexts from the experiments, which may appear ‘set up’, artificial, or deviant from the norm. And a situationist would need to explain why we have intuitive characterological concepts in the first place if they are not meaningful beyond a counterintuitive local trait sense. Let’s harp on this point - Doris himself advocates for local trait ascription, i.e., he thinks that within situationally similar events one may reliably act with trait-implicated behavior and can thus be tagged as having said local traits. However, ‘situationally similar’ is vague, and it would have to be empirically verified what level of situational detail is necessary. When I recommend a car mechanic to you as fair it’s not the case that what I actually mean is that he’s Subaru-hatchback-brought-in-on-a-sunny-weekend-day-fair because he’s been fair whenever he’s fixed my Subaru hatchback on sunny weekend days (which they’ve all happened to be) and to say anything further would be moot extrapolation.

Where have I seen this before?: theories of visual perception

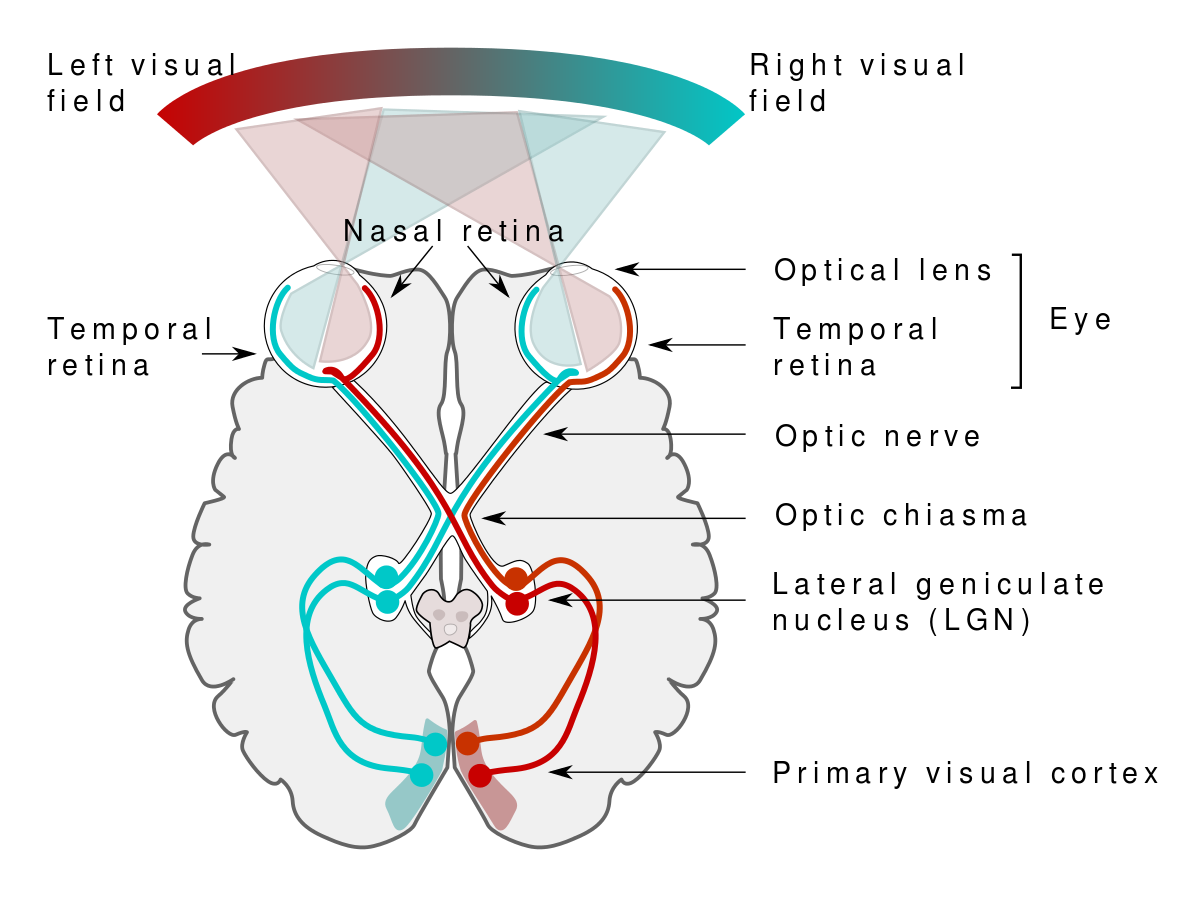

Above is a high-level diagram of the primary visual pathway (PVP). “Out there” is a photon flux which, when you look straight ahead, creates your visual field. Photons in the visual field hit photoreceptors on the back of the retina (rods and cones) which beget neural impulses. These neural signals undergo some sort of processing in the bipolar cells and amacrine cells which feed into the retinal ganglion cells. Then, the action potential (an electric potential in the membranes of the cells that rapidly rises and falls creating an impulse) of the retinal ganglion cells feeds through the optic nerve, which feeds into the lateral geniculate nucleus (LGN), which feeds into the visual cortex. The visual cortex is subdivided into V1, V2, …, V7. V1 is referred to as the primary visual cortex.

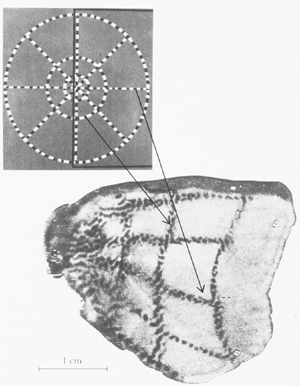

Some clever experiments have attempted to tease out how the functional structures of the PVP correspond to visual stimuli. For example, V1 has been shown to be retinotopic, meaning that physical proximity in the visual field corresponds to physical proximity in V1. An anesthetized macaque monkey (macaques are usually used for studying neural mechanisms) was shown a blinking bullseye pattern and injected with radioactive glucose. The glucose would assumedly only be taken up by active cells, which would metabolize it. The monkey was then ‘sacrificed’ and its flattened V1 was exposed to radiation-sensitive film. The result shows that some spatial aspects of the visual field line up with the spatial structure of V1.

Commonly, microelectrodes are used to measure the action potentials in retinal ganglion cells, LGN cells, and in V1. By varying the visual field (showing lines of different orientation, moving lines around, varying hue, etc.), researchers have attempted to draw links between the information processing in the PVP and basic, psychologically observable properties. Hubel and Wiesel were particularly adept at this method and shared the 1981 Nobel Prize in Physiology or Medicine for their efforts, inspiring many others to follow suit. This is how some cells have been shown, for example, to display center-surround antagonism (also called the center-surround receptive field).4 Plausibly, this helps in detecting edges - center-surround receptive fields are a bit reminiscent of Sobel filters. So, assumedly, with enough effort, we could exhaustively explain how the PVP enables the detection of real-world features via analysis of neural signals. It is not that simple.
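For readers who think in filters, the comparison can be sketched as a toy model. The assumption (mine, not the source's) is that a receptive field is a linear weighting of a 3x3 patch of the visual field. The weights in `ON_CENTER` are hypothetical and chosen only so that center and surround cancel under uniform light. `SOBEL_X` is the standard horizontal Sobel kernel.

```python
# Toy linear "receptive fields" over a 3x3 patch (1 = light, 0 = dark).
# ON_CENTER weights are illustrative, not measured values.
ON_CENTER = [[-1, -1, -1],
             [-1,  8, -1],
             [-1, -1, -1]]

SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]


def response(kernel, patch):
    """Weighted sum of the patch: a stand-in for a change in firing rate."""
    return sum(k * p
               for k_row, p_row in zip(kernel, patch)
               for k, p in zip(k_row, p_row))


uniform         = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]  # full-field light
center_spot     = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]  # light on the center
surround_spot   = [[1, 0, 0], [0, 0, 0], [0, 0, 0]]  # light in the surround
vertical_edge   = [[0, 0, 1], [0, 0, 1], [0, 0, 1]]  # dark left, bright right
horizontal_edge = [[0, 0, 0], [0, 0, 0], [1, 1, 1]]  # dark top, bright bottom

if __name__ == "__main__":
    stimuli = [("uniform", uniform), ("center spot", center_spot),
               ("surround spot", surround_spot),
               ("vertical edge", vertical_edge),
               ("horizontal edge", horizontal_edge)]
    for name, patch in stimuli:
        print(f"{name:16s} on-center: {response(ON_CENTER, patch):3d}  "
              f"sobel-x: {response(SOBEL_X, patch):3d}")
```

Both kernels ignore uniform illumination and respond to local contrast, which is where the resemblance lies. The difference is that the Sobel kernel is orientation-selective (it responds to the vertical edge but not the horizontal one), while the center-surround kernel responds to either edge equally and cares only about center versus surround.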

It’s particularly interesting to read how this has played out in the empirical investigation of hue perception and color opponency (an observed psychophysical phenomenon of color). To summarize for the curious but vacillating: LGN has nice groups of cone opponent cells, but it’s not exactly like Hering (the guy who proposed opponency theory) predicted. With something like complementary colors, you get a center-surround receptive field in LGN cells. However, in V1 you can measure all kinds of different receptive fields, and the signals from those aforementioned LGN groups are further combined in different ways, even early in the PVP processing. So, opponent theory (roughly the psychophysical idea that two pairs of colors, red/green and yellow/blue, are opposites and form ‘color opponent axes’ by which other colors are measured) does seem to play a role in unique hue perception, but the mechanism is not that any individual cell’s response determines the perceived hue. For example, you can divide the visual field into two halves vertically with medium wavelengths and long wavelengths. Then, the excitation of the cell is actually less than if the visual field were monochromatic, implying edge detection with color contrast is not well explained by any individual cell’s singular opponent receptive field. Some V1 cells do exhibit double opponent receptive fields (i.e. having two opponent, spatially separate sub-regions in the visual field, one stimulated by medium wavelength light and inhibited by long wavelength light, and vice versa) which give some orientation and color contrast information. But the mechanisms, altogether, are unclear and probably involve ensembles of cells and complicated higher-order processing. There is hope that new microelectrode arrays that enable measuring many cells’ responses at once will help solve the puzzle.

Dale Purves argues against this paradigm insofar as it attempts to explain visual perception in terms of directly and formulaically picking out real-world properties.5 Here’s why: first, even in terms of projecting 3D space onto a 2D retinal image, many physical features (position, orientation, size, etc.) are conflated in the process. Second, carefully documented illusions (Hering curvature, Poggendorff, Müller-Lyer, among dozens of others) are not the odd exceptions but rather the profound rules. Meaning, they are not ‘illusions’ but rather they just illuminate how our visual perception works. Any theory of perception worth its salt should explain them just as well as it explains normal ‘veridical’ experience (experience phenomenally matching physical measurements). His alternative empirical proposal is simply that visual perception is meant to beget behaviors that make survival and reproduction more likely. Neural configurations that allow organisms to navigate common, frequently occurring visual scenarios are going to propagate forward via natural selection. In a sense, this is supposed to get around the fact that retinal experience does not (and likely cannot) allow the unambiguous specification of real-world properties. More than a ‘just so’ story, Purves et al. have tested their hypothesis against empirical data. In each experiment, one would expect that empirical frequency in natural scenes should correspond in some meaningful way with perceptual discriminatory ability, and this is what they’ve found. For example, in the psychophysical literature, perceived line length has been shown to depend on line orientation. Horizontal lines are correctly estimated while vertical lines are overestimated, with the peak overestimation at about 25-30 degrees off the vertical axis in either direction. The percentile ranking of lines of various orientations in a natural scene captured by laser range scanning matches this psychophysical result.6

So?:

It’s probably not clear what I’m getting at. What I’m interested in here is Dale Purves’ hypothesis of empiricism (via cumulative human experience) in contrast to the physiological approach which is starting from action potentials in cells and trying to work its way up to full explanations of visual perception. Reading about the necessity of microelectrode arrays and “higher-order processing”, which is puzzlingly implicated even early on in the PVP signal chain, reminds me of the problem of uniformity vs empirical adequacy in personality psychology. That is, presumably ensembles of cell responses combine and interact in ways that determine our visual experience. But how they do so may be inscrutable, similar to how parameters in neural nets appear meaningless upon inspection. Similarly, I can imagine a deep learning model being tasked to predict behavior given some sort of constraints and a set of possible results. But again, whatever variables it grabs onto as determinative or predictive may be meaningless to us -- perhaps they won’t be as fine-grained as fundamental physical properties (though reductively tracking all low-level fundamental physical events and using physical reasoning may get us to the predictive results we crave, at least, according to the physicalist), but they also won’t be nearly as coarse as personality traits either. So, the models will likely be empirically accurate, but they won’t explain. Does that matter?

A quick aside on knowledge:

Knowledge is usually introduced as ‘justified true belief’7, though this definition comes from a Socratic dialogue in which it is thrown out after some conceptual analysis between Socrates and his interlocutor Theaetetus. A famous set of counterexamples called the Gettier cases have cast some doubt on it. Even more simply, one can imagine the case of using a broken clock as evidence for deducing what time it is. If you, totally unaware of the clock’s broken status, look at the clock exactly when the displayed time matches the real time (say, 12:30), simply by chance, you would assumedly believe the time was 12:30, and you would be justified in believing you know the time is 12:30 since you just examined a clock, and it would be true that the displayed time is the actual time (it is really 12:30). Thus, according to the ‘justified true belief’ account, you have knowledge of the time. However, since you reached this conclusion by a wild stroke of luck, we’re reluctant to call this knowledge. Regardless, knowledge as ‘justified true belief’ does have an air of plausibility. If I know something, that implies that I believe it. If it’s false, then it can’t count as knowledge. And if I have no justification, meaning that a belief I hold is true merely by chance or luck, that would not seem to count as knowledge either. So, philosophers have taken up ameliorating ‘justified true belief’ to further clarify what counts as justification, in a sense arriving at something like ‘knowledge is justified true belief sans Gettier cases’.

Still, to me, knowledge is not clearly one genus. Some have drawn the distinction between knowing that and knowing how8. This is actually an ancient Hindu distinction between vijnana (book smarts, theoretical knowledge, dualistic/dividing knowledge) and jnana (experiential knowledge, practical spiritual knowledge of God).9 Compare knowing about fire (ajnana - ignorance), having seen fire and knowing a thing or two about thermodynamics (vijnana), and being able to make a fire and cook on it (jnana). Another distinction touching on the earlier discussion on personality -- what account of knowledge am I appealing to when, if you ask if I know your friend John, I reply “Yes, I know John”?

Perhaps knowledge as ‘justified true belief’ holds the most weight in terms of knowing facts. For the purposes of this article, I will assume that’s the case. So, knowledge for our purposes is an intensional propositional attitude of the form ‘I know (that) p’, where p is some proposition. By intensional, I mean that it might be true that ‘Bernard knows that Joe Biden has a stutter’ but not that ‘Bernard knows that the 47th Vice President of the United States has a stutter’, in case he’s not aware of the referent in the latter case, and as such does not believe the latter case.

Back to deep learning:

Along the lines of models being empirically accurate but not explaining, I’d like to sketch out a thought experiment. First, note that unless the proposition or fact we’re considering is clearly a priori (provably true or false sans any empirical wherewithal), or perhaps more strictly analytic a priori, it should originate in some empirical source. Examples include perception, memory, introspection, and testimony. One can easily question the reliability of any of these, especially when we propose them as sources of knowledge. However, it may also be absurd to exclude any of them. Consider testimony. One’s own empirical experience is not enough to justify the expanse of things one considers known via second-hand testimony. We can demarcate between reliable or trustworthy and unreliable or untrustworthy sources of testimony, but what makes that reasonable? If someone utters “p”, and they’re totally reliable in terms of ascertaining “p”, but we don’t know that person, wouldn’t we reasonably refuse to use their testimony to claim we know that p? And, on the other hand, can we really say we have enough evidence and experience with all of our testimonial sources to reliably attribute them as trustworthy sources of knowledge?10 One may appeal to methodology in the case of scientific testimony, implying that scientific testimony is especially trustworthy. However, one can also make a Thomas Kuhn-esque inductive observation that the basic theoretical tenets of successive scientific theories are incommensurable (contradictory), and if the future is like the past, we can reliably bet on new theories superseding our current ones at some point, so these basic tenets are in some sense unreliable sources of knowledge. Ponderously, our basic moral intuitions have changed less in the past millennia than our scientific intuitions, though we might be more inclined to see the former as more up in the air.

Now, onto the thought experiment. Consider a deep learning model tasked to classify species of birds given input audio and video (or just a picture). Suppose also you know someone who is an expert bird watcher. Let’s suppose also that the deep learning model has trillions of parameters and has inductively proven to be extremely accurate - perhaps it is correct over 99.9% of the time. That is, whatever model the deep learning algorithm picked out has generalized unusually well. Can we use the model as a source of knowledge? That is, can we say ‘I know that the bird over on that tree is a mountain chickadee’ because the model said so? Assume it’s true that the bird is a mountain chickadee. It seems to me that perhaps I have no better reason to trust the bird watcher than the model. Haven’t they both learned from empirical sources? In fact, if the bird watcher disagreed with the model, I might be skeptical of the bird watcher, but let's assume she also independently determines the bird to be a mountain chickadee. In trusting her testimony, we might find it appealing that the bird watcher displays discriminatory abilities like ours. When asked why the bird is a mountain chickadee, the bird watcher might introspect and explain that the bird's face has a pattern like such and such, and the bird’s song has such and such quality. Ergo, the bird is a mountain chickadee. This ‘justification’ step presumably turns true belief into knowledge. But I’m unconvinced this gives us good reason to prefer the bird watcher’s testimony, given the model’s previously acknowledged minuscule error rate which implies that it picks out a highly generalizable and non-trivial hypothesis. I can think of two possible reasons that could support this, both admittedly needing much elaboration and evidence. First, which I just mentioned, is that the hypothetical deep learning model is highly generalizable. Concepts may themselves be functional kinds implicated in finding regularities and explaining. 
That is, they may serve as determinative variables. If this is the case, the fact that a machine epistemology has different determinative variables may not be so concerning. In fact, since “to err is human”, we may prefer the machine’s testimony. Second, I’m not certain that this modus ponens type reasoning (perceiving face structure A and song type B implies mountain chickadee AND A and B were perceived THEREFORE mountain chickadee) actually occurred when it intuitively seemed to the bird watcher that the bird was a mountain chickadee. Rather, perhaps these higher-level reasons came to be when we asked the bird watcher to introspect and linguistically report convincing, explanatory reasoning to us. Or, more minimally committing, these variables may have only been a few among many others, all of which, though less linguistically explicable, were necessary for producing the conclusion of it seeming to the bird watcher that the bird is a mountain chickadee. My evidence for this is philosophy’s own failure to report necessary and sufficient conditions for anything, despite spending millennia trying. There’s likely more going on than what a few linguistic premises can capture. Perhaps the intuitive seeming pertains to more etiologically inscrutable variables and is not all that different from the deep learning model, insofar as the fact that the model’s parameters are seen as ‘meaningless’, and this is seen as an epistemic limitation. However, when contemporary scientific practice largely teases out statistical/causal relations (like ‘smoking causes cancer’) rather than lawlike explanans/explanandum relations, trusting statistical models might be less of a geist shift than we think. I will spend the next article exploring these two points in more detail. For now, I lead with a question: is ‘justification’ something of a vague necessity for knowledge? 
Or, at least for some kinds of knowledge (if knowledge is somehow pluralistic), is it acceptable to lack explanatory power when empirical accuracy is gained?

Those of us privy to the inner workings of deep learning might be a bit more skeptical. Is doing gradient descent on some error function, like neural nets do, really going to approximate or meaningfully supersede our actual reasoning, whether or not the trained models have as many parameters as there are synapses in the human brain, as OpenAI’s GPT-4 has been rumored to have? Additionally, some models are prone to adversarial attacks. Adversarial attacks clearly display how, in classification problems, machine learning models pick out categories with certain determinative variables for classification such that, while they probabilistically tend to work, they sure don’t match ours. This is reason enough, if any, to be dismissive. Still others, just as privy, would know that how machine learning works in practice is almost always with supervision, though companies would love to avoid it, and are trying to push towards unsupervised, semi-supervised, and reinforcement learning. That is, companies like Amazon collect user data and hire thousands of data annotators to go through and label it all to improve and extend the models. This can, for example, reduce error rates for Alexa’s speech-to-text, or bring Alexa to a new language. This is why speech-to-text (used also by Google, Apple, etc.) accuracy improved so rapidly over the past couple of years. Other companies will label themselves as machine learning enterprises but hire workers to verify data results before they become customer facing. Then, they will mine their workers' decisions to improve their models, eventually (so they hope) eliminating the need for verification. Isn’t this essentially farming their workers’ trained intuitions? Meaning, if we trusted the trained intuitions in the first place, why not the model in the end? And then again, whether the model is supervised or unsupervised, why should we trust or favor our concepts and determinative variables at all, even in the case of categorical mismatch? Maybe they just suck. What if, like our characterological concepts, they’re empirically inadequate, and perhaps, if we’re to believe Doris, in need of replacement? This is farcical in terms of trusting a model that miscategorizes a panda as a gibbon after adding a nearly transparent amount of noise to the input image -- clearly, this model is reaching an unsatisfactory local minimum -- but we may one day face more subtle cases, and we may want to ask what degree of categorical mismatch is acceptable enough to still consider the model reliable.
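To see how a nearly invisible perturbation can flip a classification, here is a minimal sketch in the spirit of the fast gradient sign method, applied to a toy linear classifier rather than a real image model. Everything here is an illustrative assumption: the weights `w`, the input `x`, the dimension `d`, and the step size `eps` are made up, and a deep network's gradient would have to be computed by backpropagation rather than read off the weights.

```python
def sign(v: float) -> int:
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def score(w, x) -> float:
    """Linear decision score; positive means class +1, otherwise class -1."""
    return sum(wi * xi for wi, xi in zip(w, x))


def predict(w, x) -> int:
    return 1 if score(w, x) > 0 else -1


def sign_attack(w, x, label: int, eps: float):
    """Nudge every feature by at most eps in the direction that lowers the
    score for `label`. For a linear score the gradient with respect to x
    is just w, so the worst-case direction is -label * sign(w)."""
    return [xi - eps * label * sign(wi) for wi, xi in zip(w, x)]


d = 1000                                              # number of "pixels"
w = [1.0 if i % 2 == 0 else -1.0 for i in range(d)]   # hypothetical weights
x = [0.5 + 0.01 * wi for wi in w]                     # mid-gray input, class +1
x_adv = sign_attack(w, x, predict(w, x), eps=0.02)
max_change = max(abs(a - b) for a, b in zip(x, x_adv))

if __name__ == "__main__":
    print("original prediction: ", predict(w, x), "score", round(score(w, x), 3))
    print("perturbed prediction:", predict(w, x_adv), "score", round(score(w, x_adv), 3))
    print("largest per-feature change:", round(max_change, 3))
```

No single feature moves by more than 0.02, yet the score swings from about +10 to about -10 because a thousand tiny nudges all push the same way. The variables that decide the classification are spread thinly across the whole input, which is one way of making sense of the claim that the model's determinative variables do not match ours.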

Summary:

In part one, I introduced two disparate sets of problems where the empirical evidence is pointing towards large scientific models that may actually just resemble deep learning insofar as the determinative, predictive variables of the models may not mean much to us (that is, they lack uniformity). First, situationist psychology implicated local traits tied to local situational variables as the main explanatory predictors of behavior. Just how fine-grained these local situational variables must be is vague. Interestingly, in this case, the situationist view bumps up against our intuitive concepts of character, which we would introspectively implicate in determining or explaining behavior, and which have the benefit of being uniform. Second, the race to explain visual perception from physiological measurements is pointing towards ‘higher-order processing’ that microelectrode arrays are hoped to reveal. Though we lack intuitive concepts of perception to bump up against, Purves’ empiricist hypothesis provides a uniformity-displaying, ‘higher level’ explanans by appealing to natural selection and abandoning assumptions of direct realism. Further, the empiricist hypothesis paves the way for the comparison between deep learning models and visual perception by empirically demonstrating multiple links between frequency of occurrence in nature and ‘illusions’ - i.e., discrepancies between subjectively reported visual properties and physical measurements. That is, statistical models of perception may adduce this corroborating link.

In the next part, I’d like to introduce a relevant classical paradox and spend some time building philosophical machinery in order to talk more clearly about intuition, perception, and explanation.

References

Doris, J. M. (2002). Lack of Character: Personality and Moral Behavior. Cambridge University Press.

Easwaran, E. (1985). The Bhagavad Gita. The Blue Mountain Center of Meditation.

New York University. (2006). Perception Lecture Notes: Retinal Ganglion Cells.

Purves, D., Morgenstern, Y., & Wojtach, W. T. (2015). Perception and Reality: Why a Wholly Empirical Paradigm is Needed to Understand Vision. Frontiers in Systems Neuroscience, 9(November).

Steup, M., & Neta, R. (2020). Epistemology. The Stanford Encyclopedia of Philosophy.

Notes

1. (Doris, 2002, 69)
2. (Doris, 2002, 22)
3. (Doris, 2002, 72)
4. (New York University, 2006) - roughly, the firing rates of action potentials in retinal ganglion cells and LGN cells are affected by stimuli in the visual field. You can measure this firing rate while, for example, adjusting the position of white light in the visual field. Some cells are ON center OFF surround, while others are OFF center ON surround. The former cells will exhibit an excitatory response in firing rate when the small spot of light is in the center of the visual field but an inhibitory response when the light is located in a surrounding annulus, and vice versa.
5. (Purves et al., 2015)
6. (Purves et al., 2011)
7. (Steup & Neta, 2020)
8. (Steup & Neta, 2020)
9. (Easwaran, 1985, 147)
10. (Steup & Neta, 2020)